Limitations

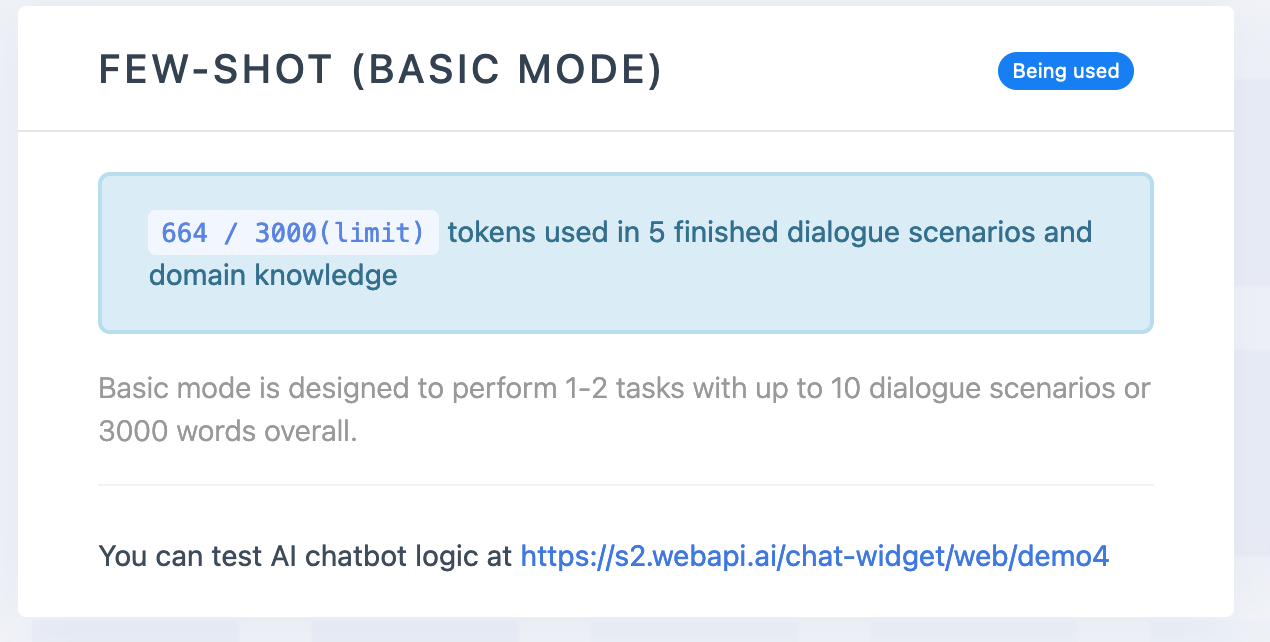

Since we use GPT-4 as our main AI model, the number of tokens per request is technically limited. Depending on the model type, each request can use between 5,000 and 30,000 tokens, shared between Domain Knowledge and Dialogue Scenarios (standard version). You can see how many tokens you are using on the "AI Settings" page. Learn more on the Pricing page.

What are tokens?

Tokens can be thought of as pieces of words. Here are some helpful rules of thumb for relating tokens to text length:

- 1 token ~= 4 chars in English

- 1 token ~= ¾ words

- 100 tokens ~= 75 words
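As a rough illustration, the Python sketch below applies these rules of thumb. It estimates a token count from characters and from words, then checks whether combined Domain Knowledge and Dialogue Scenarios text would likely fit a shared budget. The function names and the `budget` parameter are hypothetical and do not come from the product. The results are heuristics only. Exact counts depend on the model's tokenizer, and the authoritative figure is the one shown on the "AI Settings" page.

```python
import math

# Heuristic token estimates based on the rules of thumb above (English text).
# Hypothetical helpers for illustration; real counts depend on the model's tokenizer.


def tokens_from_chars(text: str) -> int:
    """Estimate tokens assuming ~4 characters per token, rounded up."""
    return math.ceil(len(text) / 4)


def tokens_from_words(text: str) -> int:
    """Estimate tokens assuming ~3/4 of a word per token (100 tokens ~= 75 words)."""
    words = len(text.split())
    return math.ceil(words * 4 / 3)


def fits_budget(domain_knowledge: str, dialogue_scenarios: str, budget: int) -> bool:
    """Check whether both texts together likely fit a shared token budget.

    Uses the larger of the two estimates for each text to stay cautious.
    `budget` is an assumed parameter; the real limit depends on the model type.
    """
    total = sum(
        max(tokens_from_chars(part), tokens_from_words(part))
        for part in (domain_knowledge, dialogue_scenarios)
    )
    return total <= budget


if __name__ == "__main__":
    sample = "word " * 75  # 75 words, 375 characters
    print(tokens_from_words(sample))  # 100, matching "100 tokens ~= 75 words"
    print(fits_budget(sample, sample, 5000))  # True
```

Taking the larger of the two estimates is a deliberate choice: the character rule and the word rule can disagree, and overestimating is safer than finding out a request exceeds the limit.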
